Summary

Most AI marketing vendors are building sophisticated solutions to problems that don't exist while ignoring the strategic challenges that actually need solving. This framework helps marketing leaders separate genuine AI value from expensive feature theater through five critical evaluation questions. Real strategic advantage comes from AI that amplifies human expertise rather than replacing it. The companies succeeding with AI marketing understand their workflows well enough to know exactly what they want technology to improve.

“Our AI can predict which leads will convert with 94.7% accuracy,” the vendor said, clicking through slides filled with neural network diagrams and machine learning buzzwords.

“Can you tell us which of our current leads will convert next week?” we asked.

Twenty minutes of hemming and hawing later, it became clear they couldn’t. Their “AI” required six months of historical data, perfect lead scoring hygiene, and a data science team to interpret outputs.

Meanwhile, our client’s sales director could predict conversions by looking at email response patterns and meeting behavior. No AI required.

That demo crystallized something we had long suspected: most AI marketing tools are elaborate solutions to problems that don’t actually exist.

The Great AI Marketing Gold Rush

Walk into any marketing conference and you’ll be bombarded with promises: “Increase conversion rates by 340%!” “Automate your entire content strategy!” “Predict customer behavior with unprecedented accuracy!”

Here’s what they don’t tell you: these tools are solving problems you probably don’t have while ignoring problems you definitely do have.

Problems you don’t have: Insufficient data (you’re drowning in it), lack of automation (everything’s already automated), missing predictive models (your gut instincts are probably better).

Problems you do have: Data that doesn’t connect to business outcomes, automation that creates more work than it saves, predictions that don’t help you make better decisions.

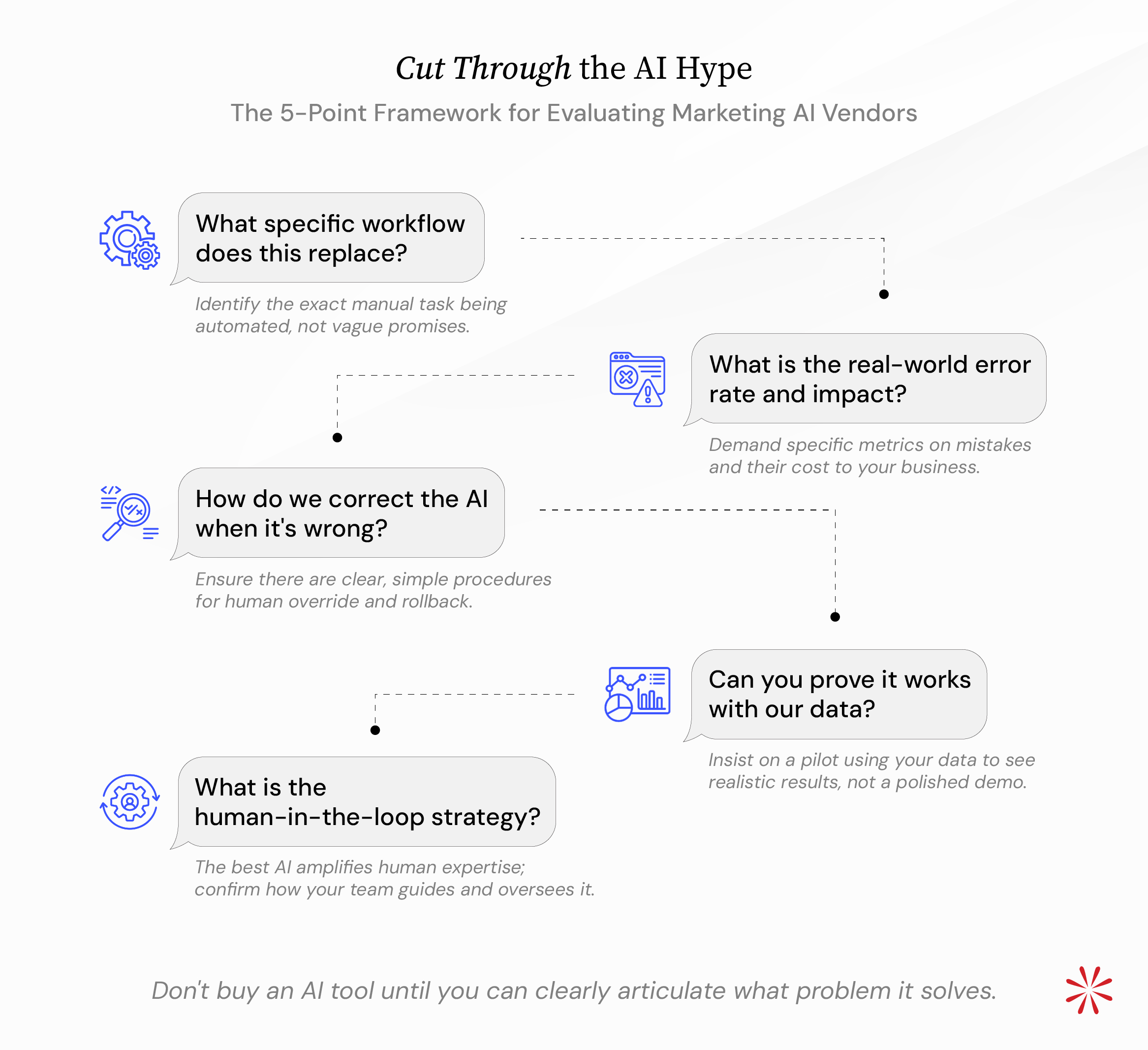

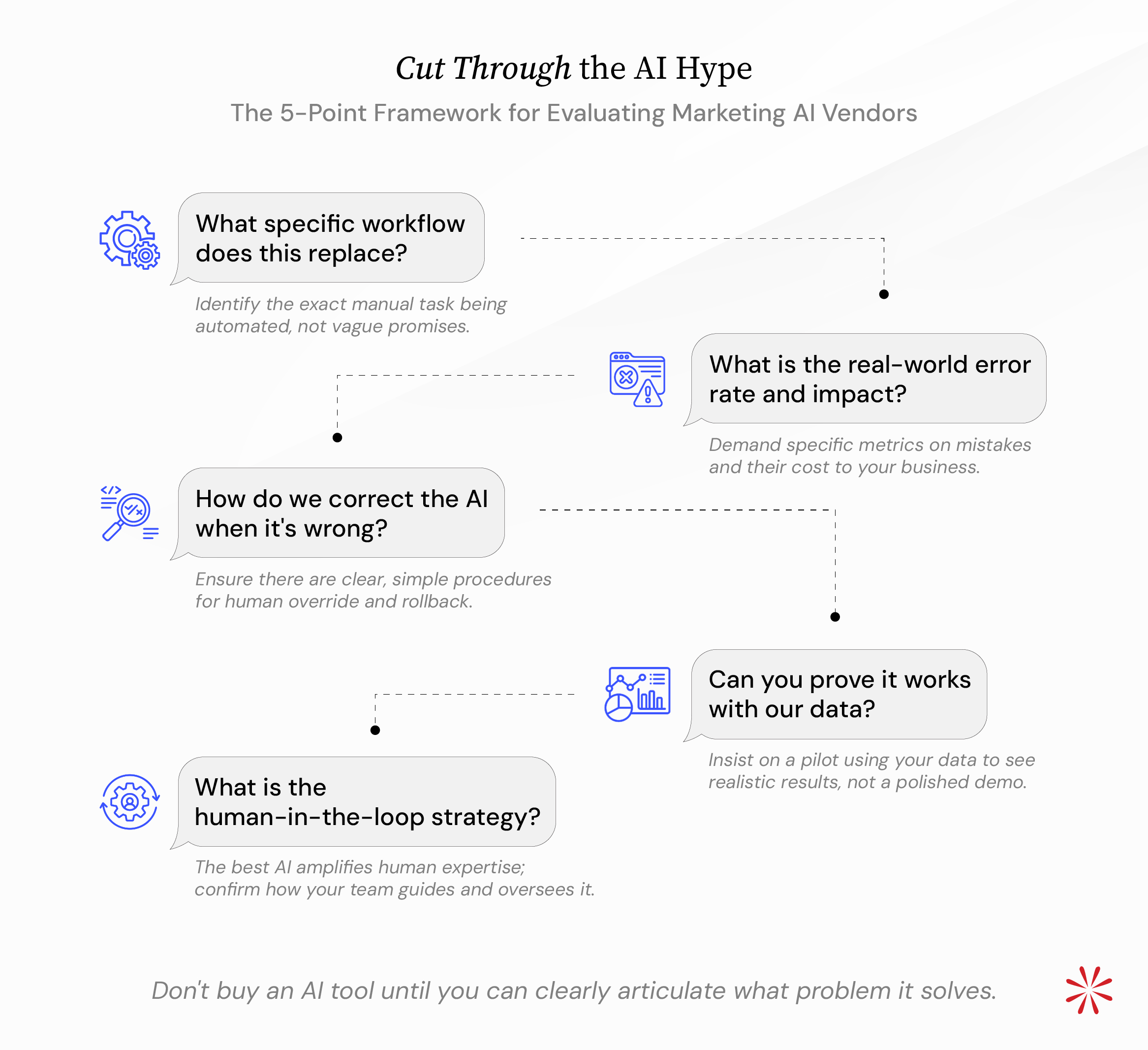

The QiWorks AI Vendor Reality Check Framework

Question 1: “What specific workflow does this replace?” The right answer: Clear, step-by-step description of manual work that will no longer need human intervention. The wrong answer: Vague promises about “optimization” or “enhancement.”

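Question 2: “Show me the false positive rate.” Every AI system makes mistakes. The question is whether those mistakes cost more than the problems the AI solves. A single accuracy figure can hide this: when only a small share of leads convert, a model can score well overall while most of the leads it flags are wrong. The right answer: Specific false positive percentages and mitigation strategies. The wrong answer: “Our AI is 99.3% accurate” without defining what accuracy means.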
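To make the accuracy problem concrete, here is a minimal Python sketch. The counts are invented for illustration (1,000 leads, 50 of which convert) and do not come from any vendor or client; the `ConfusionCounts` and `summarize` names are our own assumptions, not part of any tool.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    true_pos: int   # flagged as "will convert" and did convert
    false_pos: int  # flagged as "will convert" but did not
    true_neg: int   # not flagged and did not convert
    false_neg: int  # not flagged but did convert


def summarize(c: ConfusionCounts) -> dict:
    """Return the metrics a vendor should be able to quote separately."""
    total = c.true_pos + c.false_pos + c.true_neg + c.false_neg
    return {
        "accuracy": (c.true_pos + c.true_neg) / total,
        "false_positive_rate": c.false_pos / (c.false_pos + c.true_neg),
        "precision": c.true_pos / (c.true_pos + c.false_pos),
        "recall": c.true_pos / (c.true_pos + c.false_neg),
        # Accuracy of a "model" that simply predicts nobody converts.
        "always_no_baseline_accuracy": (c.false_pos + c.true_neg) / total,
    }


# Hypothetical numbers: 1,000 leads, 50 real conversions.
counts = ConfusionCounts(true_pos=30, false_pos=40, true_neg=910, false_neg=20)
metrics = summarize(counts)

for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

With these made-up counts the model is 94% accurate, yet fewer than half of the leads it flags actually convert, and a rule that predicts "no one converts" would score 95%. That is why the headline accuracy number alone tells you very little.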

Question 3: “What happens when your AI makes the wrong decision?” The right answer: Clear rollback procedures, human override capabilities, and audit trails. The wrong answer: “The AI learns from its mistakes” (translation: you become the beta tester).

Question 4: “Can you replicate this result with our actual data?” Demo magic is real. Your data is messier and more complex than their curated datasets. The right answer: Proof-of-concept using your actual data with realistic performance expectations. The wrong answer: “Every client sees similar results.”

Question 5: “What’s your human-in-the-loop strategy?” The best AI amplifies human intelligence rather than replacing it. The right answer: Clear workflows showing when humans intervene and how they override AI decisions. The wrong answer: “You won’t need humans once our AI is trained.”
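Questions 3 and 5 can be pictured as a simple routing rule: confident AI decisions are applied automatically, uncertain ones wait for a person, and every step is logged so it can be reviewed or reversed. The Python sketch below is one way to express that idea, not a description of any vendor's product. The 0.80 threshold, the `Decision` and `ReviewQueue` names, and the reviewer address are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

REVIEW_THRESHOLD = 0.80  # assumed cutoff; tune it against your own error costs


@dataclass
class Decision:
    item_id: str
    ai_label: str
    confidence: float
    final_label: str = ""
    decided_by: str = ""


@dataclass
class ReviewQueue:
    audit_log: list = field(default_factory=list)
    pending: dict = field(default_factory=dict)

    def _record(self, event: str, d: Decision) -> None:
        # Append-only trail: what happened, to which item, and who decided.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "item_id": d.item_id,
            "ai_label": d.ai_label,
            "final_label": d.final_label,
            "decided_by": d.decided_by,
        })

    def submit(self, d: Decision) -> Decision:
        if d.confidence >= REVIEW_THRESHOLD:
            d.final_label, d.decided_by = d.ai_label, "ai"
            self._record("auto_applied", d)
        else:
            self.pending[d.item_id] = d  # held until a human decides
            self._record("queued_for_review", d)
        return d

    def override(self, d: Decision, new_label: str, reviewer: str) -> Decision:
        # Works for queued items and for reversing an auto-applied decision.
        self.pending.pop(d.item_id, None)
        d.final_label, d.decided_by = new_label, reviewer
        self._record("human_override", d)
        return d


queue = ReviewQueue()
confident = queue.submit(Decision("lead-1", "high_value", 0.93))
unsure = queue.submit(Decision("lead-2", "high_value", 0.55))
queue.override(unsure, "low_value", reviewer="analyst@example.com")
```

A vendor with a real human-in-the-loop strategy should be able to show you the equivalent of each piece: where the threshold lives, who sees the queue, and what the audit trail looks like after an override.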

Real-World Case Study

We evaluated an AI audience segmentation tool for a fintech client. Here’s how our framework played out:

- Workflow replacement: Would replace 4 hours of monthly manual segmentation. Pass.

- False positives: 89% accuracy claimed, but 34% of “high-value” segments contained low-engagement users. Fail.

- Error handling: No rollback capabilities. Manual override required rebuilding entire campaigns. Fail.

- Real data: Demo showed dramatic improvements, but pilot with client data showed only 12% improvement. Conditional pass.

- Human oversight: AI made decisions automatically with no review process. Fail.

Final decision: We recommended against the tool despite genuine AI capabilities.
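The verdicts above can be tallied in a small scorecard. The sketch below records the five results from this case study; the decision rule (reject on any outright fail, pilot further on a conditional pass) is our simplifying assumption for illustration, and the `Verdict` and `recommend` names are hypothetical.

```python
from enum import Enum


class Verdict(Enum):
    PASS = "pass"
    CONDITIONAL = "conditional pass"
    FAIL = "fail"


# Results from the fintech segmentation evaluation described above.
case_study = {
    "workflow_replacement": Verdict.PASS,
    "false_positives": Verdict.FAIL,
    "error_handling": Verdict.FAIL,
    "real_data": Verdict.CONDITIONAL,
    "human_oversight": Verdict.FAIL,
}


def recommend(scores: dict, max_fails: int = 0) -> tuple:
    """Assumed rule: too many fails rejects; a conditional pass means more piloting."""
    fails = [question for question, v in scores.items() if v is Verdict.FAIL]
    if len(fails) > max_fails:
        return "reject", fails
    if any(v is Verdict.CONDITIONAL for v in scores.values()):
        return "pilot further", fails
    return "proceed", fails


decision, failed_questions = recommend(case_study)
print(decision, failed_questions)
```

Writing the verdicts down this way keeps the conversation on the three failed questions rather than on how impressive the demo looked.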

Six months later: The client’s manual segmentation had evolved to incorporate behavioral triggers we learned about while evaluating the AI tool. Result: 28% engagement improvement at zero additional cost.

What Actually Works: Strategic AI Implementation

The real AI revolution won’t come from tools that replace human decision-making. It will come from tools that give humans better information to make better decisions.

We worked with a franchise network facing regional performance challenges: cost per lead ran 67% higher in Eastern markets when the network reused its Southern strategies unchanged. The tempting solution was standard AI approaches: sentiment analysis, content generators, predictive models.

The effective solution combined analytical intelligence with cultural behavior pattern recognition. AI-reinforced analysis identified specific regional variables that manual analysis would have taken months to uncover. Results: 340% improvement in engagement rates and 67% cost reduction across channels.

The AI wasn’t replacing strategic thinking. It amplified human expertise by identifying which cultural variables actually mattered, so the team could make data-driven decisions quickly.

Companies getting real value from AI marketing tools understand their workflows well enough to know exactly what they want AI to improve. They use the technology to enhance strategic capabilities rather than automate confusion.

Key Takeaways:

- Use the five-question framework to separate genuine value from feature theater

- Focus on AI that amplifies human intelligence rather than replacing it

- Insist on pilots with your actual data before any commitments

- Implement AI to give people better information for decisions, not to make the decisions for them

- The best solutions combine technological capability with strategic expertise

Ready to implement AI-reinforced marketing that drives measurable business results? Our embedded approach helps identify and implement intelligent automation that enhances strategic thinking without operational complexity.